A few years ago, in the times before machine learning & smart bidding, ads A/B testing was simple (or at least we thought so): you would take an ad copy focusing on price & pit it against an ad copy that puts free delivery front and center. The free delivery ad would get more purchases, a better CVR & a lower CPA (of course, everybody loves free delivery), so you would keep the free delivery ad active, pause the other one & call it a day.

With the takeover of machine learning & responsive search ads (RSA from here on), ads A/B testing has changed. When creating an RSA you can add up to 15 headlines & up to 4 descriptions for one ad (more details about RSA can be found here). This means that a single RSA can be shown in 43,680 combinations. With that many options, Google, empowered by machine learning & user data, should figure out which ad copy works best for each user & serve the ad accordingly: even though the free delivery copy might be a better fit for the majority of users & have better results, some users might convert better with the price copy, while others might prefer a storytelling copy.
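One way to arrive at the 43,680 figure is sketched below. It assumes that each impression shows 3 headlines in a specific order together with either 1 or 2 descriptions, also in order. These display rules are an assumption made for the sake of the arithmetic, not something stated above, and the variable names are illustrative.

```python
from math import perm

MAX_HEADLINES = 15
MAX_DESCRIPTIONS = 4

# Assumption: 3 headlines are shown and their order matters.
headline_arrangements = perm(MAX_HEADLINES, 3)  # 15 * 14 * 13

# Assumption: either 1 or 2 descriptions are shown, order matters.
description_arrangements = perm(MAX_DESCRIPTIONS, 1) + perm(MAX_DESCRIPTIONS, 2)  # 4 + 12

total_combinations = headline_arrangements * description_arrangements
print(f"{total_combinations:,} possible combinations for one RSA")
```

Under different display assumptions (for example, when fewer headlines are shown) the count would change, but the point stays the same: one RSA already covers far more variants than anyone could test by hand.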

With all of this in mind, the question is: at what scale can we still do ads A/B testing?

Getting back to the example from the introduction: after you figure out that the free delivery ad performs better than the price ad, traditionally you would pause the price ad, giving the free delivery ad more impressions in order to improve your overall results. What could happen, in theory, is that even if you push only the better ad (free delivery), you lose some incremental conversions that the worse performing ad (price) was bringing in.

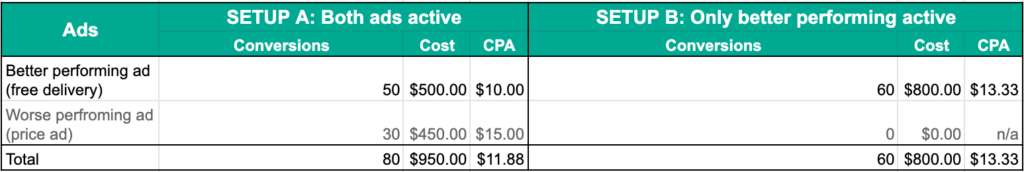

Let’s take a look at a fictional example with 2 setups. In SETUP A both ads are active: the free delivery ad gets 50 conversions (CPA of $10), while the price ad gets 30 conversions (CPA of $15). You might be thinking that it is definitely worth pausing the price ad (higher CPA, fewer conversions) & pushing the free delivery ad to boost the overall result. Before doing this, it would be smart to validate that assumption. Basically, we are pitting two setups against each other in an experiment:

SETUP A – both ads active

SETUP B – only better performing ad active

You might expect that in SETUP B all 30 conversions of the price ad (paused in this setup) will shift to the free delivery ad at its favorable CPA. But what actually happens? Only 10 of them shift to the free delivery ad, and its CPA ends up higher than in the original SETUP A. Overall, we end up with fewer conversions (60 instead of 80) & a higher CPA.
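The comparison can be sketched in a few lines. The SETUP A numbers come from the example above; the SETUP B CPA is not given in the text, so the value used here (`HYPOTHETICAL_CPA_B`) is purely an assumption for illustration, as are the function and variable names.

```python
def summarize(ads):
    """Return (conversions, cost, blended CPA) for a list of (conversions, cpa) pairs."""
    conversions = sum(conv for conv, _ in ads)
    cost = sum(conv * cpa for conv, cpa in ads)
    return conversions, cost, cost / conversions

# SETUP A: free delivery ad (50 conversions, $10 CPA) + price ad (30 conversions, $15 CPA)
setup_a = [(50, 10.0), (30, 15.0)]

# SETUP B: only the free delivery ad, which picks up 10 extra conversions.
# The CPA below is a made-up value, chosen only to illustrate the comparison.
HYPOTHETICAL_CPA_B = 12.5
setup_b = [(50 + 10, HYPOTHETICAL_CPA_B)]

conv_a, cost_a, cpa_a = summarize(setup_a)
conv_b, cost_b, cpa_b = summarize(setup_b)

print(f"SETUP A: {conv_a} conversions, blended CPA ${cpa_a:.2f}")
print(f"SETUP B: {conv_b} conversions, blended CPA ${cpa_b:.2f}")
print("SETUP B is worse on both metrics:", conv_b < conv_a and cpa_b > cpa_a)
```

The useful number here is the blended CPA of SETUP A: the price ad looks expensive on its own, but the setup as a whole is the baseline that SETUP B has to beat.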

Why could something like this happen?

Even though the price ad has a higher CPA & fewer conversions in SETUP A, there might be users who respond better to the price ad than to the free delivery ad. When they see only the free delivery ad, a portion of them will convert anyway, while others might decide to check other search engine results.

Within the office, we conducted several similar experiments across different clients & regardless of the industry we always ended up with similar results. Pushing only the best performing ad, or leaving out the worst performing one, brought us less favorable overall results than having all ads included (regardless of their performance).

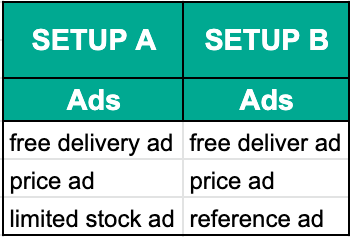

So, how do you do ads A/B testing in times of machine learning? As the example shows, the old-fashioned way might not be the best option. Before excluding worse performing ads, it would be wise to at least test the hypothesis presented above. In other words, having as many ads as possible might be the best approach. This doesn’t necessarily mean that your A/B testing stops here. You can keep searching for better ad combinations. How? In cases where an advertising platform has a limit on the number of active ads (it’s hard to believe there is one that supports an unlimited number of active ads), you can A/B test ad setups instead of single ads. Let’s say the platform supports a maximum of 3 active ads. You might want to check whether one combination of 3 ads (SETUP A in the image) gives better results than a slightly different combination (SETUP B in the image).
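To get a feeling for how many setups there are to test, here is a minimal sketch that lists every possible setup for a small pool of ads. The pool itself is hypothetical: the first three themes come from the examples above, while "urgency" is an invented fourth ad added only so that there is more than one combination.

```python
from itertools import combinations

# Hypothetical ad pool; "urgency" is an invented example, not from the article.
ad_pool = ["price", "free delivery", "storytelling", "urgency"]

# Assumed platform limit on active ads, as in the example above.
MAX_ACTIVE_ADS = 3

# Every distinct group of 3 ads is one candidate setup to test.
candidate_setups = list(combinations(ad_pool, MAX_ACTIVE_ADS))

for number, setup in enumerate(candidate_setups, start=1):
    print(f"Setup {number}: {', '.join(setup)}")
```

With 4 ads and 3 slots there are only 4 setups, but the list grows quickly as the pool grows, so it probably makes sense to test setups that differ by a single ad, one pair at a time.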

Happy testing!